11. References

11.1. Assembly and contigs related

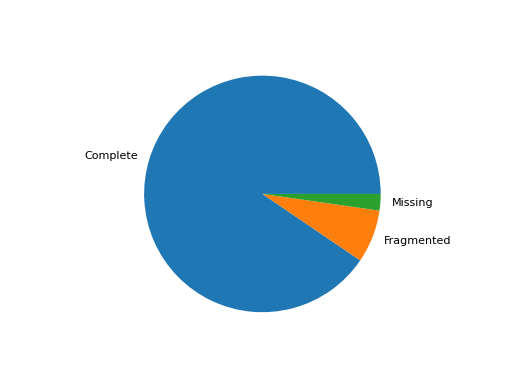

- class BUSCO(filename='full_table_test.tsv')[source]

Wrapper of the BUSCO output

"BUSCO provides a quantitative measures for the assessment of a genome assembly, gene set, transcriptome completeness, based on evolutionarily-informed expectations of gene content from near-universal single-copy orthologs selected from OrthoDB v9." -- BUSCO website 2017

This class reads the full report generated by BUSCO and provides some visualisation of this report. The information is stored in a dataframe

df. The score can be retrieve with the attributescorein percentage in the range 0-100.- Reference:

Note

support version 3.0.1 and new formats from v5.X

constructor

- Filename:

a valid BUSCO input file (full table). See example in sequana code source (testing)

- pie_plot(filename=None, hold=False)[source]

Pie plot of the status (completed / fragment / missed)

from sequana import BUSCO, sequana_data b = BUSCO(sequana_data("test_busco_full_table.tsv")) b.pie_plot()

(

Source code,png,hires.png,pdf)

- save_core_genomes(contig_file, output_fasta_file='core.fasta')[source]

Save the core genome based on busco and assembly output

The busco file must have been generated from an input contig file. In the example below, the busco file was obtained from the data.contigs.fasta file:

from sequana import BUSCO b = BUSCO("busco_full_table.tsv") b.save_core_genomes("data.contigs.fasta", "core.fasta")

If a gene from the core genome is missing, the extracted gene is made of 100 N's If a gene is duplicated, only the best entry (based on the score) is kept.

If there are 130 genes in the core genomes, the output will be a multi-sequence FASTA file made of 130 sequences.

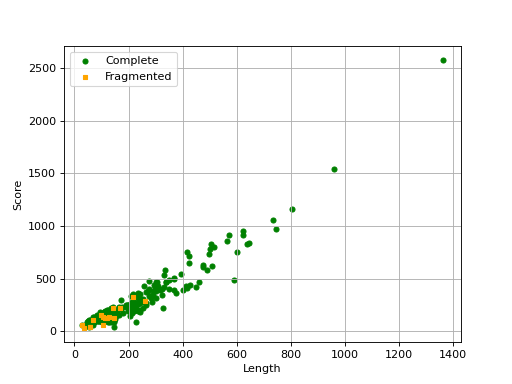

- scatter_plot(filename=None, hold=False)[source]

Scatter plot of the score versus length of each ortholog

from sequana import BUSCO, sequana_data b = BUSCO(sequana_data("test_busco_full_table.tsv")) b.scatter_plot()

(

Source code,png,hires.png,pdf)

Missing are not show since there is no information about contig .

- property score

- class Contigs(filename, mode='canu')[source]

Utilities for summarising or plotting contig information

Depending on how the FastA file was created, different types of plots can be are available. For instance, if the FastA was created with Canu, nreads and covStat information can be extracted. Therefore, plots such as

plot_scatter_contig_length_vs_nreads_cov()andplot_contig_length_vs_nreads()can be used.Constructor

- Parameters:

filename -- input FastA file

canu -- tool that created the output file.

- property df

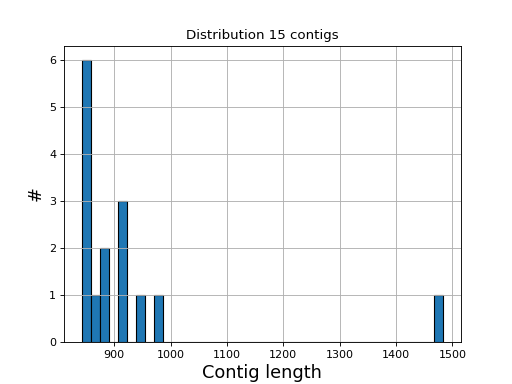

- hist_plot_contig_length(bins=40, fontsize=16, lw=1)[source]

Plot distribution of contig lengths

- Parameters:

bin -- number of bins for the histogram

fontsize -- fontsize for xy-labels

lw -- width of bar contour edges

ec -- color of bar contours

(

Source code,png,hires.png,pdf)

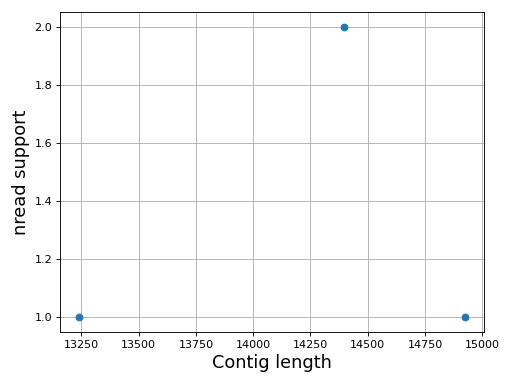

- plot_contig_length_vs_nreads(fontsize=16, min_length=5000, min_nread=10, grid=True, logx=True, logy=True)[source]

Plot contig length versus nreads

In canu, contigs have the number of reads that support them. Here, we can see whether contigs have lots of reads supported them or not.

Note

For Canu output only

(

Source code,png,hires.png,pdf)

- class ContigsBase(filename)[source]

Parent class for contigs data

Constructor

- Parameters:

filename -- input file name

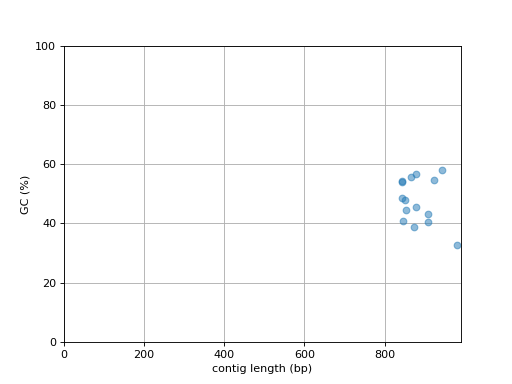

- plot_contig_length_vs_GC(alpha=0.5)[source]

Plot contig GC content versus contig length

(

Source code,png,hires.png,pdf)

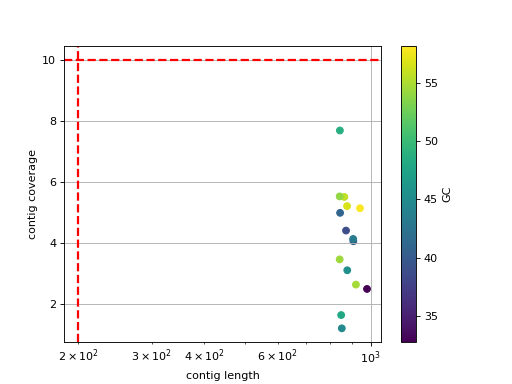

- scatter_length_cov_gc(min_length=200, min_cov=10, grid=True, logy=False, logx=True)[source]

Plot scatter length versus GC content

- Parameters:

min_length -- add vertical line to indicate possible contig length cutoff

min_cov -- add horizontal line to indicate possible coverage contig cutff

grid -- add grid to the plot

logy -- set y-axis log scale

logx -- set x-axis log scale

(

Source code,png,hires.png,pdf)

11.2. BAMTOOLS related

Tools to manipulate BAM/SAM files

|

Helper class to retrieve info about Alignment |

|

BAM reader. |

|

CRAM Reader. |

|

Convenient structure to store several BAM files |

|

SAM Reader. |

|

Utility to extract bits from a SAM flag |

|

Base class for SAM/BAM/CRAM data sets |

Note

BAM being the compressed version of SAM files, we do not implement any functionalities related to SAM files. We strongly encourage developers to convert their SAM to BAM.

- class Alignment(alignment)[source]

Helper class to retrieve info about Alignment

Takes an alignment as read by

BAMand provides a simplified version of pysam.Alignment class.>>> from sequana.bamtools import Alignment >>> from sequana import BAM, sequana_data >>> b = BAM(sequana_data("test.bam")) >>> segment = next(b) >>> align = Alignment(segment) >>> align.as_dict() >>> align.FLAG 353

The original data is stored in hidden attribute

_dataand the following values are available as attributes or dictionary:QNAME: a query template name. Reads/segment having same QNAME come from the same template. A QNAME set to * indicates the information is unavailable. In a sam file, a read may occupy multiple alignment

FLAG: combination of bitwise flags. See

SAMFlagsRNAME: reference sequence

POS

MAPQ: mapping quality if segment is mapped. equals -10 log10 Pr

CIGAR: See

sequana.cigar.CigarRNEXT: reference sequence name of the primary alignment of the NEXT read in the template

PNEXT: position of primary alignment

TLEN: signed observed template length

SEQ: segment sequence

QUAL: ascii of base quality

constructor

- Parameters:

alignment -- alignment instance from

BAM

- class BAM(filename, *args)[source]

BAM reader. See

SAMBAMbasefor details

- class CRAM(filename, *args)[source]

CRAM Reader. See

SAMBAMbasefor details

- class CS(tag)[source]

Interpret CS tag from SAM/BAM file tag

>>> from sequana import CS >>> CS('-a:6-g:14+g:2+c:9*ac:10-a:13-a') {'D': 3, 'I': 2, 'M': 54, 'S': 1}

When using some mapper, CIGAR are stored in another format called CS, which also includes the substitutions. See minimap2 documentation for details.

- class SAMBAMbase(filename, mode='r', *args)[source]

Base class for SAM/BAM/CRAM data sets

We provide a few test files in Sequana, which can be retrieved with sequana_data:

>>> from sequana import BAM, sequana_data >>> b = BAM(sequana_data("test.bam")) >>> len(b) 1000 >>> from sequana import CRAM >>> b = CRAM(sequana_data("test_measles.cram")) >>> len(b) 60

- bam_analysis_to_json(filename)[source]

Create a json file with information related to the bam file.

This includes some metrics (see

get_stats(); eg MAPQ), combination of flags, SAM flags, counters about the read length.

- boxplot_qualities(max_sample=500000)[source]

Same as in

sequana.fastq.FastQC

- get_df_concordance(max_align=-1, progress=True)[source]

This methods returns a dataframe with Insert, Deletion, Match, Substitution, read length, concordance (see below for a definition)

Be aware that the SAM or BAM file must be created using minimap2 and the --cs option to store the CIGAR in a new CS format, which also contains the information about substitution. Other mapper are also handled (e.g. bwa) but the substitution are solely based on the NM tag if it exists.

alignment that have no CS tag or CIGAR are ignored.

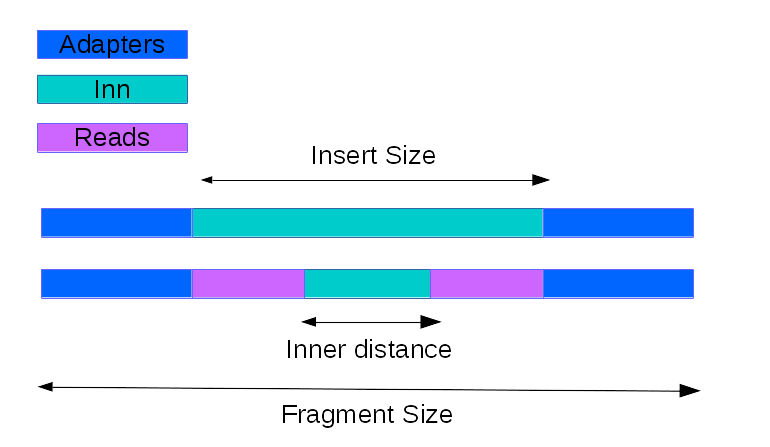

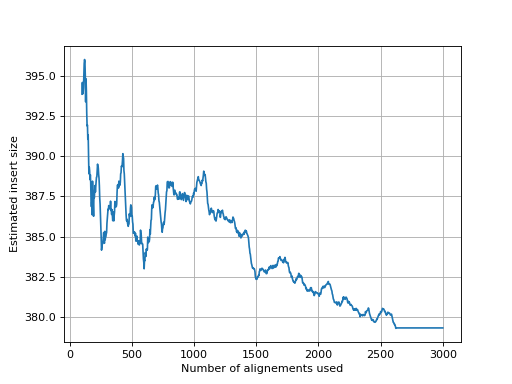

- get_estimate_insert_size(max_entries=100000, upper_bound=1000, lower_bound=-1000)[source]

Here we show that about 3000 alignments are enough to get a good estimate of the insert size.

(

Source code,png,hires.png,pdf)

- get_flags_as_df()[source]

Returns decomposed flags as a dataframe

>>> from sequana import BAM, sequana_data >>> b = BAM(sequana_data('test.bam')) >>> df = b.get_flags_as_df() >>> df.sum() 0 0 1 1000 2 484 4 2 8 2 16 499 32 500 64 477 128 523 256 64 512 0 1024 0 2048 0 dtype: int64

See also

SAMFlagsfor meaning of each flag

- get_mapped_read_length()[source]

Return dataframe with read length for each read

(

Source code,png,hires.png,pdf)

- get_samflags_count()[source]

Count how many reads have each flag of SAM format.

- Returns:

dictionary with keys as SAM flags

- get_samtools_stats_as_df()[source]

Return a dictionary with full stats about the BAM/SAM file

The index of the dataframe contains the flags. The column contains the counts.

>>> from sequana import BAM, sequana_data >>> b = BAM(sequana_data("test.bam")) >>> df = b.get_samtools_stats_as_df() >>> df.query("description=='average quality'") 36.9

Note

uses samtools behind the scene

- get_stats()[source]

Return basic stats about the reads

- Returns:

dictionary with basic stats:

total_reads : number reads ignoring supplementaty and secondary reads

mapped_reads : number of mapped reads

unmapped_reads : number of unmapped

mapped_proper_pair : R1 and R2 mapped face to face

reads_duplicated: number of reads duplicated

Warning

works only for BAM files. Use

get_samtools_stats_as_df()for SAM files.

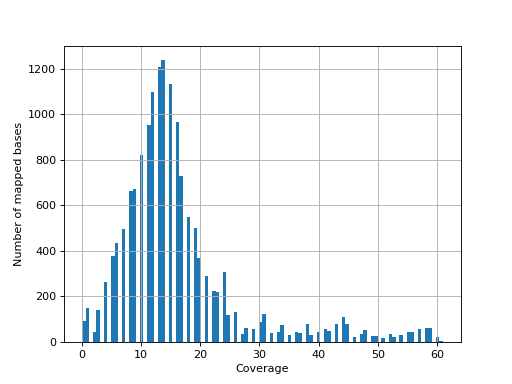

- hist_coverage(chrom=None, bins=100)[source]

from sequana import sequana_data, BAM b = BAM(sequana_data("measles.fa.sorted.bam")) b.hist_coverage()

(

Source code,png,hires.png,pdf)

- hist_soft_clipping()[source]

histogram of soft clipping length ignoring supplementary and secondary reads

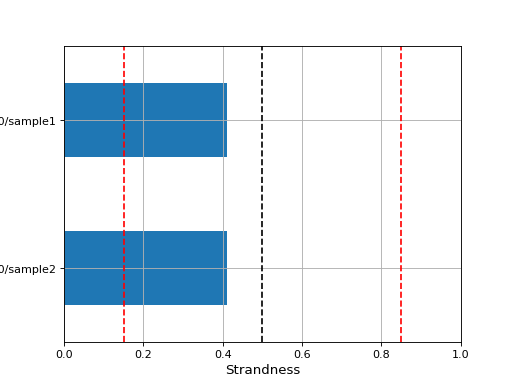

- infer_strandness(reference_bed, max_entries, mapq=30)[source]

- Parameters:

reference_bed -- a BED file (12-columns with columns 1,2,3,6 used) or GFF file (column 1, 3, 4, 5, 6 are used

mapq -- ignore alignment with mapq below 30.

max_entries -- can be long. max_entries restrict the estimate

Strandness of transcript is determined from annotation while strandness of reads is determined from alignments.

For non strand-specific RNA-seq data, strandness of reads and strandness of transcript are independent.

For strand-specific RNA-seq data, strandness of reads is determined by strandness of transcripts.

This functions returns a list of 4 values. First one indicates whether data is paired or not. Second and third one are ratio of reads explained by two types of strandness of reads vs transcripts. Last values are fractions of reads that could not be explained. The values 2 and 3 tell you whether this is a strand-specificit dataset.

If similar, it is no strand-specific. If the first value is close to 1 while the other is close to 0, this is a strand-specific dataset

- property is_paired

- property is_sorted

return True if the BAM is sorted

- mRNA_inner_distance(refbed, low_bound=-250, up_bound=250, step=5, sample_size=1000000, q_cut=30)[source]

Estimate the inner distance of mRNA pair end fragment.

from sequana import BAM, sequana_data b = BAM(sequana_data("test_hg38_chr18.bam")) df = b.mRNA_inner_distance(sequana_data("hg38_chr18.bed"))

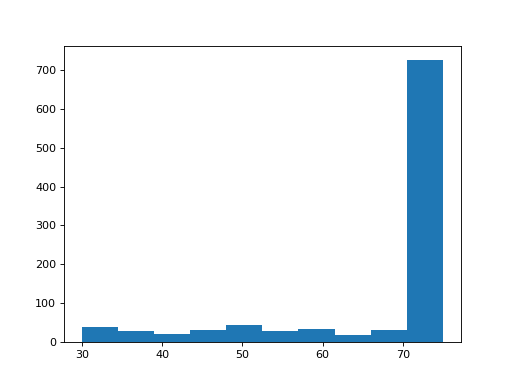

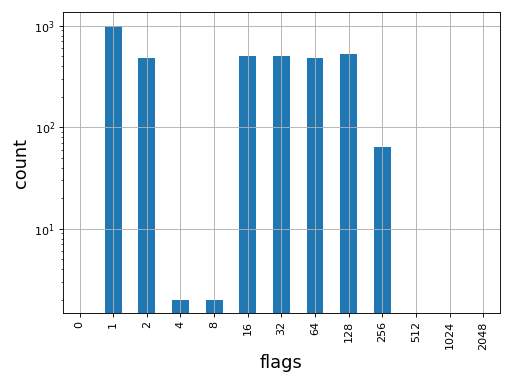

- plot_bar_flags(logy=True, fontsize=16, filename=None)[source]

Plot an histogram of the flags contained in the BAM

from sequana import BAM, sequana_data b = BAM(sequana_data('test.bam')) b.plot_bar_flags()

(

Source code,png,hires.png,pdf)

See also

SAMFlagsfor meaning of each flag

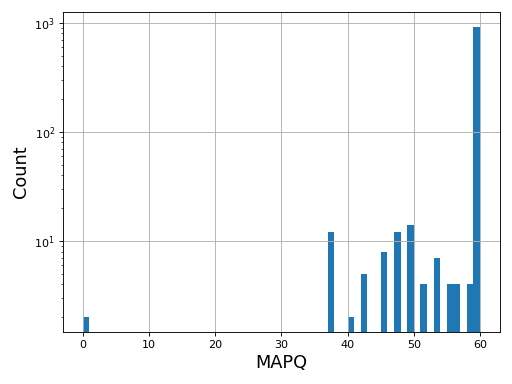

- plot_bar_mapq(fontsize=16, filename=None)[source]

Plots bar plots of the MAPQ (quality) of alignments

from sequana import BAM, sequana_data b = BAM(sequana_data('test.bam')) b.plot_bar_mapq()

(

Source code,png,hires.png,pdf)

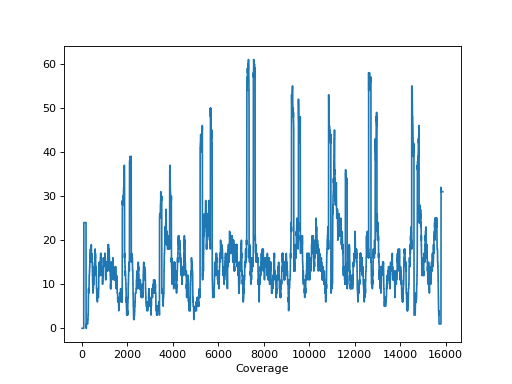

- plot_coverage(chrom=None)[source]

Please use

SequanaCoveragefor more sophisticated tools to plot the genome coveragefrom sequana import sequana_data, BAM b = BAM(sequana_data("measles.fa.sorted.bam")) b.plot_coverage()

(

Source code,png,hires.png,pdf)

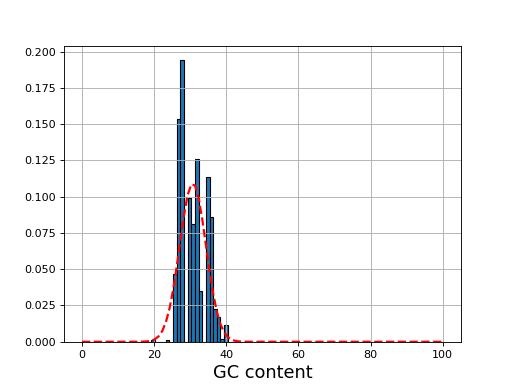

- plot_gc_content(fontsize=16, ec='k', bins=100)[source]

plot GC content histogram

- Params bins:

a value for the number of bins or an array (with a copy() method)

- Parameters:

ec -- add black contour on the bars

from sequana import BAM, sequana_data b = BAM(sequana_data('test.bam')) b.plot_gc_content()

(

Source code,png,hires.png,pdf)

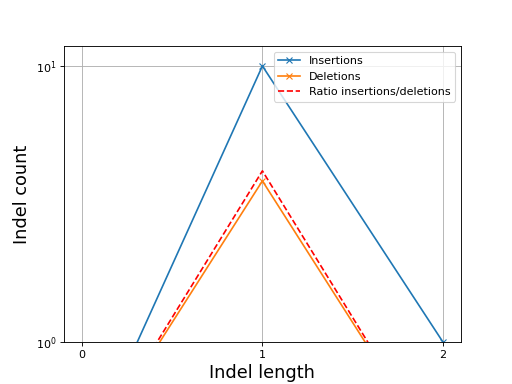

- plot_indel_dist(fontsize=16)[source]

Plot indel count (+ ratio)

- Return:

list of insertions, deletions and ratio insertion/deletion for different length starting at 1

from sequana import sequana_data, BAM b = BAM(sequana_data("measles.fa.sorted.bam")) b.plot_indel_dist()

(

Source code,png,hires.png,pdf)

What you see on this figure is the presence of 10 insertions of length 1, 1 insertion of length 2 and 3 deletions of length 1

# Note that in samtools, several insertions or deletions in a single alignment are ignored and only the first one seems to be reported. For instance 10M1I10M1I stored only 1 insertion in its report; Same comment for deletions.

Todo

speed up and handle long reads cases more effitiently by storing INDELS as histograms rather than lists

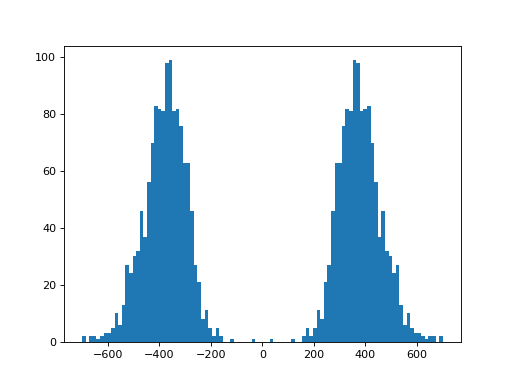

- plot_insert_size(max_entries=100000, bins=100, upper_bound=1000, lower_bound=-1000, absolute=False)[source]

This gives an idea of the insert size without taking into account any intronic gap. The mode should give a good idea of the insert size though.

(

Source code,png,hires.png,pdf)

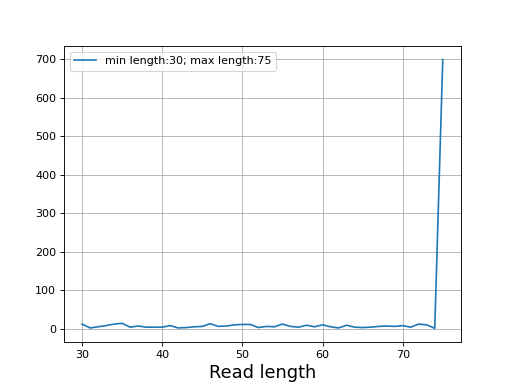

- plot_read_length()[source]

Plot occurences of aligned read lengths

from sequana import sequana_data, BAM b = BAM(sequana_data("test.bam")) b.plot_read_length()

(

Source code,png,hires.png,pdf)

- plotly_hist_read_length(log_y=False, title='', xlabel='Read length (bp)', ylabel='count', **kwargs)[source]

Histogram of the read length using plotly

- Parameters:

log_y --

title --

any additional arguments is pass to the plotly.express.hist function

- property summary

Count flags/mapq/read length in one pass.

- to_fastq(filename)[source]

Export the BAM to FastQ format

Todo

comments from original reads are not in the BAM so will be missing

Method 1 (bedtools):

bedtools bamtofastq -i JB409847.bam -fq test1.fastq

Method2 (samtools):

samtools bam2fq JB409847.bam > test2.fastq

Method3 (sequana):

from sequana import BAM BAM(filename) BAM.to_fastq("test3.fastq")

Note that the samtools method removes duplicated reads so the output is not identical to method 1 or 3.

- class SAMFlags(value=4095)[source]

Utility to extract bits from a SAM flag

>>> from sequana import SAMFlags >>> sf = SAMFlags(257) >>> sf.get_flags() [1, 256]

You can also print the bits and their description:

print(sf)

bit

Meaning/description

0

mapped segment

1

template having multiple segments in sequencing

2

each segment properly aligned according to the aligner

4

segment unmapped

8

next segment in the template unmapped

16

SEQ being reverse complemented

32

SEQ of the next segment in the template being reverse complemented

64

the first segment in the template

128

the last segment in the template

256

secondary alignment

512

not passing filters, such as platform/vendor quality controls

1024

PCR or optical duplicate

2048

supplementary alignment

11.3. Coverage (bedtools module)

Utilities for the genome coverage

- class ChromosomeCov(genomecov, chrom_name, thresholds=None, chunksize=5000000)[source]

Factory to manipulate coverage and extract region of interests.

Example:

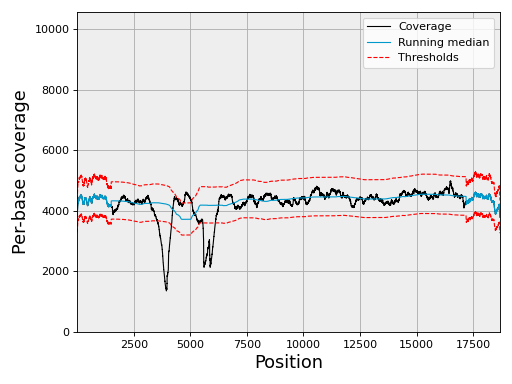

from sequana import SequanaCoverage, sequana_data filename = sequana_data("virus.bed") gencov = SequanaCoverage(filename) chrcov = gencov[0] chrcov.running_median(n=3001) chrcov.compute_zscore() chrcov.plot_coverage() df = chrcov.get_rois().get_high_rois()

(

Source code,png,hires.png,pdf)

The df variable contains a dataframe with high region of interests (over covered)

If the data is large, the input data set is split into chunk. See

chunksize, which is 5,000,000 by default.If your data is larger, then you should use the

run()method.See also

sequana_coverage standalone application

constructor

- Parameters:

df -- dataframe with position for a chromosome used within

SequanaCoverage. Must contain the following columns: ["pos", "cov"]genomecov --

chrom_name -- to save space, no need to store the chrom name in the dataframe.

thresholds -- a data structure

DoubleThresholdsthat holds the double threshold values.chunksize -- if the data is large, it is split and analysed by chunk. In such situations, you should use the

run()instead of calling the running_median and compute_zscore functions.

- property BOC

breadth of coverage

- property C3

- property C4

- property CV

The coefficient of variation (CV) is defined as sigma / mu

- property DOC

depth of coverage

- property STD

standard deviation of depth of coverage

- property bed

- compute_zscore(k=2, use_em=True, clip=4, verbose=True)[source]

Compute zscore of coverage and normalized coverage.

- Parameters:

Store the results in the

dfattribute (dataframe) with a column named zscore.Note

needs to call

running_median()before hand.

- property df

- property evenness

Return Evenness of the coverage

- Reference:

Konrad Oexle, Journal of Human Genetics 2016, Evaulation of the evenness score in NGS.

work before or after normalisation but lead to different results.

- get_centralness(threshold=3)[source]

Proportion of central (normal) genome coverage

This is 1 - (number of non normal data) / (total length)

Note

depends on the thresholds attribute being used.

Note

depends slightly on

the running median window

the running median window

- get_gc_correlation()[source]

Return the correlation between the coverage and GC content

The GC content is the one computed in

SequanaCoverage.compute_gc_content()(default window size is 101)

- get_rois()[source]

Keep positions with zscore outside of the thresholds range.

- Returns:

a dataframe from

FilteredGenomeCov

Note

depends on the

thresholdslow and high values.

- get_stats()[source]

Return basic stats about the coverage data

only "cov" column is required.

- Returns:

dictionary

- moving_average(n, circular=False)[source]

Compute moving average of the genome coverage

- Parameters:

n -- window's size. Must be odd

circular (bool) -- is the chromosome circular or not

Store the results in the

dfattribute (dataframe) with a column named ma.

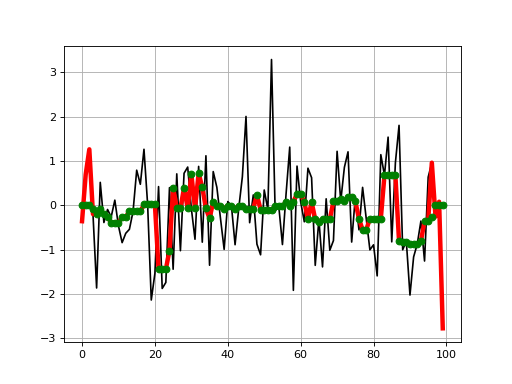

- plot_coverage(filename=None, fontsize=16, rm_lw=1, rm_color='#0099cc', rm_label='Running median', th_lw=1, th_color='r', th_ls='--', main_color='k', main_lw=1, main_kwargs={}, sample=True, set_ylimits=True, x1=None, x2=None, clf=True)[source]

Plot coverage as a function of base position.

- Parameters:

filename --

rm_lw -- line width of the running median

rm_color -- line color of the running median

rm_color -- label for the running median

th_lw -- line width of the thresholds

th_color -- line color of the thresholds

main_color -- line color of the coverage

main_lw -- line width of the coverage

sample -- if there are more than 1 000 000 points, we use an integer step to skip data points. We can still plot all points at your own risk by setting this option to False

set_ylimits -- we want to focus on the "normal" coverage ignoring unsual excess. To do so, we set the yaxis range between 0 and a maximum value. This maximum value is set to the minimum between the 10 times the mean coverage and 1.5 the maximum of the high coverage threshold curve. If you want to let the ylimits free, set this argument to False

x1 -- restrict lower x value to x1

x2 -- restrict lower x value to x2 (x2 must be greater than x1)

Note

if there are more than 1,000,000 points, we show only 1,000,000 by points. For instance for 5,000,000 points,

In addition to the coverage, the running median and coverage confidence corresponding to the lower and upper zscore thresholds are shown.

Note

uses the thresholds attribute.

- plot_gc_vs_coverage(filename=None, bins=None, Nlevels=None, fontsize=20, norm='log', ymin=0, ymax=100, contour=True, cmap='BrBG', **kwargs)[source]

Plot histogram 2D of the GC content versus coverage

- plot_hist_coverage(logx=True, logy=True, fontsize=16, N=25, fignum=1, hold=False, alpha=0.8, ec='k', filename=None, zorder=10, **kw_hist)[source]

- Parameters:

N --

ec --

- plot_hist_normalized_coverage(filename=None, binwidth=0.05, max_z=3)[source]

Barplot of the normalized coverage with gaussian fitting

- plot_hist_zscore(fontsize=16, filename=None, max_z=6, binwidth=0.5, **hist_kargs)[source]

Barplot of the zscore values

- plot_rois(x1, x2, set_ylimits=False, rois=None, fontsize=16, color_high='r', color_low='g', clf=True)[source]

- property rois

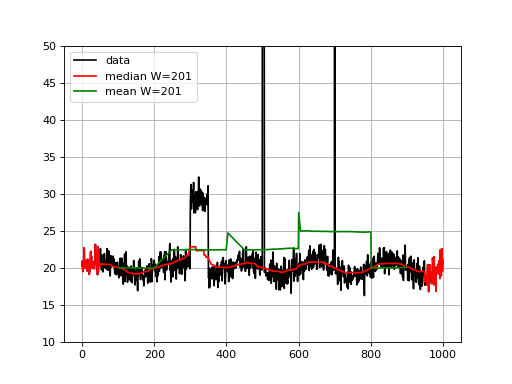

- running_median(n, circular=False)[source]

Compute running median of genome coverage

- Parameters:

Store the results in the

dfattribute (dataframe) with a column named rm.Changed in version 0.1.21: Use Pandas rolling function to speed up computation.

- class DoubleThresholds(low=-3, high=3, ldtr=0.5, hdtr=0.5)[source]

Simple structure to handle the double threshold for negative and positive sides

Used by the

SequanaCoverageand related classes.dt = DoubleThresholds(-3, 4, 0.5, 0.5)

This means the low threshold is -3 while the high threshold is 4. The two following values must be between 0 and 1 and are used to define the value of the double threshold set to half the value of the main threshold.

Internally, the main thresholds are stored in the low and high attributes. The secondary thresholds are derived from the main thresholds and the two ratios. The ratios are named ldtr and hdtr for low double threshold ratio and high double threshold ratio. The secondary thresholds are denoted low2 and high2 and are update automatically if low, high, ldtr or hdtr are changed.

- property hdtr

- property high

- property high2

- property ldtr

- property low

- property low2

- class SequanaCoverage(input_filename, annotation_file=None, low_threshold=-4, high_threshold=4, ldtr=0.5, hdtr=0.5, force=False, chunksize=5000000, quiet_progress=False, chromosome_list=[], reference_file=None, gc_window_size=101)[source]

Create a list of dataframe to hold data from a BED file generated with samtools depth.

This class can be used to plot the coverage resulting from a mapping, which is stored in BED format. The BED file may contain several chromosomes. There are handled independently and accessible as a list of

ChromosomeCovinstances.Example:

from sequana import SequanaCoverage, sequana_data filename = sequana_data('JB409847.bed') reference = sequana_data("JB409847.fasta") gencov = SequanaCoverage(filename) # you can change the thresholds: gencov.thresholds.low = -4 gencov.thresholds.high = 4 #gencov.compute_gc_content(reference) gencov = SequanaCoverage(filename) for chrom in gencov: chrom.running_median(n=3001, circular=True) chrom.compute_zscore() chrom.plot_coverage() gencov[0].plot_coverage()

Results are stored in a list of

ChromosomeCovnamedchr_list. For Prokaryotes and small genomes, this API is convenient but takes lots of memory for larger genomes.Computational time information: scanning 24,000,000 rows

constructor (scanning 40,000,000 rows): 45s

select contig of 24,000,000 rows: 1min20

running median: 16s

compute zscore: 9s

c.get_rois() ():

constructor

- Parameters:

input_filename (str) -- the input data with results of a bedtools genomecov run. This is just a 3-column file. The first column is a string (chromosome), second column is the base postion and third is the coverage.

annotation_file (str) -- annotation file of your reference (GFF3/Genbank).

low_threshold (float) -- threshold used to identify under-covered genomic region of interest (ROI). Must be negative

high_threshold (float) -- threshold used to identify over-covered genomic region of interest (ROI). Must be positive

ldtr (float) -- fraction of the low_threshold to be used to define the intermediate threshold in the double threshold method. Must be between 0 and 1.

rdtr (float) -- fraction of the low_threshold to be used to define the intermediate threshold in the double threshold method. Must be between 0 and 1.

chunksize -- size of segments to analyse. If a chromosome is larger than the chunk size, it is split into N chunks. The segments are analysed indepdently and ROIs and summary joined together. Note that GC, plotting functionalities will only plot the first chunk.

force -- some constraints are set in the code to prevent unwanted memory issues with specific data sets of parameters. Currently, by default, (i) you cannot set the threshold below 2.5 (considered as noise).

chromosome_list -- list of chromosomes to consider (names). This is useful for very large input data files (hundreds million of lines) where each chromosome can be analysed one by one. Used by the sequana_coverage standalone. The only advantage is to speed up the constructor creation and could also be used by the Snakemake implementation.

reference_file -- if provided, computes the GC content

gc_window_size (int) -- size of the GC sliding window. (default 101)

- property annotation_file

Get or set the genbank filename to annotate ROI detected with

ChromosomeCov.get_roi(). Changing the genbank filename will configure theSequanaCoverage.feature_dict.

- property circular

Get the circularity of chromosome(s). It must be a boolean.

- property feature_dict

Get the features dictionary of the genbank.

- property gc_window_size

Get or set the window size to compute the GC content.

- get_stats()[source]

Return basic statistics for each chromosome

- Returns:

dictionary with chromosome names as keys and statistics as values.

See also

Note

used in sequana_summary standalone

- property input_filename

- property reference_file

- property window_size

Get or set the window size to compute the running median. Size must be an interger.

11.4. CIGAR tools

- class Cigar(cigarstring)[source]

>>> from sequana.cigar import Cigar >>> c = Cigar("2S30M1I") >>> len(c) 33 >>> c = Cigar("1S1S1S1S") >>> c.compress() >>> c.cigarstring '4S'

Possible CIGAR types are:

"M" for alignment MATCH (0)

"I" for Insertion to the reference (1)

"D" for deletion from the reference 2

"N" for skipped region from the reference 3

"S" for soft clipping (clipped sequence present in seq) 4

"H" for hard clipping (clipped sequence NOT present in seq) 5

"P" for padding (silent deletion from padded reference)

"=" for equal

"X" for diff (sequence mismatched)

"B" for back !!!! could be also NM ???

!!! BWA MEM get_cigar_stats returns list with 11 items Last item is !!! what is the difference between M and = ??? Last item is I + S + X !!! dans BWA, mismatch (X) not provided... should be deduced from last item - I - S

Note

the length of the query sequence based on the CIGAR is calculated by adding the M, I, S, =, or X and other operations are ignored. source: https://stackoverflow.com/questions/39710796/infer-the-length-of-a-sequence-using-the-cigar/39812985#39812985

Constructor

- Parameters:

cigarstring (str) -- the CIGAR string.

Note

the input CIGAR string validity is not checked. If an unknown type is found, it is ignored generally. For instance, the length of 1S100Y is 1 since Y is not correct.

- as_dict()[source]

Return cigar types and their count

- Returns:

dictionary

Note that repeated types are added:

>>> c = Cigar('1S2M1S') >>> c.as_dict() {"S":2,"M":2}

- as_tuple()[source]

Decompose the cigar string into tuples keeping track of repeated types

- Returns:

tuple

>>> from sequana import Cigar >>> c = Cigar("1S2M1S") >>> c.as_tuple() (('S', 1), ('M', 2), ('S', 1))

- cigarstring

the CIGAR string attribute

- pattern = '(\\d+)([A-Za-z])?'

- stats()[source]

Returns number of occurence for each type found in

types>>> c = Cigar("1S2M1S") >>> c.stats() [2, 0, 0, 0, 2, 0, 0, 0, 0, 0]

- types = 'MIDNSHP=XB'

valid CIGAR types

11.5. Coverage (theoretical)

- class Coverage(N=None, L=None, G=None, a=None)[source]

Utilities related to Lander and Waterman theory

We denote

the genome length in nucleotides and

the genome length in nucleotides and  the read

length in nucleotides. These two numbers are in principle well defined since

the read

length in nucleotides. These two numbers are in principle well defined since

is defined by biology and

is defined by biology and  by the sequencing machine.

by the sequencing machine.The total number of reads sequenced during an experiment is denoted

. Therefore the total number of nucleotides is simply

. Therefore the total number of nucleotides is simply  .

.The depth of coverage (DOC) at a given nucleotide position is the number of times that a nucleotide is covered by a mapped read.

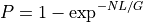

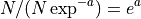

The theoretical fold-coverage is defined as :

that is the average number of times each nucleotide is expected to be sequenced (in the whole genome). The fold-coverage is often denoted

(e.g., 50X).

(e.g., 50X).In the

Coverageclass, and

and  are fixed at

the beginning. Then, if one changes

are fixed at

the beginning. Then, if one changes  , then

, then  is updated and

vice-versa so that the relation

is updated and

vice-versa so that the relation  is always true:

is always true:>>> cover = Coverage(G=1000000, L=100) >>> cover.N = 100000 # number of reads >>> cover.a # What is the mean coverage 10 >>> cover.a = 50 >>> cover.N 500000

From the equation aforementionned, and assuming the reads are uniformly distributed, we can answer a few interesting questions using probabilities.

In each chromosome, a read of length

could start at any position

(except the last position L-1). So in a genome

could start at any position

(except the last position L-1). So in a genome  with

with  chromosomes, there are

chromosomes, there are  possible starting positions.

In general

possible starting positions.

In general  so the probability that one of the

so the probability that one of the

read starts at any specific nucleotide is

read starts at any specific nucleotide is  .

.The probability that a read (of length

) covers a given

position is

) covers a given

position is  . The probability of not covering that location

is

. The probability of not covering that location

is  . For

. For  fragments, we obtain the probability

fragments, we obtain the probability

. So, the probability of covering a given

location with at least one read is :

. So, the probability of covering a given

location with at least one read is :

Since in general, N>>1, we have:

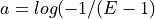

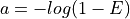

From this equation, we can derive the fold-coverage required to have e.g.,

of the genome covered:

of the genome covered:

equivalent to

The method

get_required_coverage()uses this equation. However, for numerical reason, one should not provide as an argument but (1-E).

See

as an argument but (1-E).

See get_required_coverage()Other information can also be derived using the methods

get_mean_number_contigs(),get_mean_contig_length(),get_mean_contig_length().See also

get_table()that provides a summary of all these quantities for a range of coverage.- property G

genome length

- property L

length of the reads

- property N

number of reads defined as aG/L

- property a

coverage defined as NL/G

- get_mean_number_contigs()[source]

Expected number of contigs

A binomial distribution with parameters

and

and

- get_mean_reads_per_contig()[source]

Expected number of reads per contig

Number of reads divided by expected number of contigs:

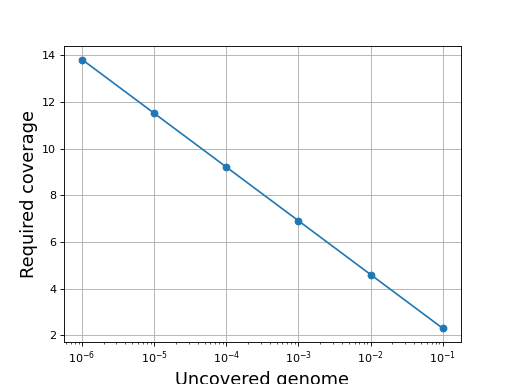

- get_required_coverage(M=0.01)[source]

Return the required coverage to ensure the genome is covered

A general question is what should be the coverage to make sure that e.g. E=99% of the genome is covered by at least a read.

The answer is:

This equation is correct but have a limitation due to floating precision. If one provides E=0.99, the answer is 4.6 but we are limited to a maximum coverage of about 36 when one provides E=0.9999999999999999 after which E is rounded to 1 on most computers. Besides, it is no convenient to enter all those numbers. A scientific notation would be better but requires to work with

instead of

instead of  .

.

So instead of asking the question what is the requested fold coverage to have 99% of the genome covered, we ask the question what is the requested fold coverage to have 1% of the genome not covered. This allows us to use

values as low as 1e-300 that is a fold coverage

as high as 690.

values as low as 1e-300 that is a fold coverage

as high as 690.- Parameters:

M (float) -- this is the fraction of the genome not covered by any reads (e.g. 0.01 for 1%). See note above.

- Returns:

the required fold coverage

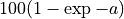

(

Source code,png,hires.png,pdf)

# The inverse equation is required fold coverage = [log(-1/(E - 1))]

11.6. Access to online database (e.g. ENA)

Utilities to access to online FASTA, taxon, lineage ...

- class ENADownload[source]

Downloader to retrieve genome fasta files from ENA amongst other things

In order to facilitate the download of FASTA files (e.g. to build a Kraken DB), this class can be used to download a bunch of FASTA files, or just one given its accession.

Some OLD pre-defined lists are available from ENA. We refer to them as virus, plasmid, phage, archaealvirus, archaea, bacteria, organelle, viroid.

Warning

the header of the FASTA files are changed to add the GI number instead of embl so th&at it can be used by our kraken builder class.

constructor

- add_gi_to_header(acc)[source]

Kraken will only accept the GI from NCBI so we need to convert the ENA accession to GI numbers

- download_fasta(filelist, output_dir=None)[source]

Download a FASTA (or list of)

- Parameters:

filelist -- a name to find on the ENA web server OR the name of an accession number or a file with accession numbers (1 column)

Warning

The filename is named after the accession without .X number If there are several variant .1, .2 the later will be used. This should not happen if the list is properly defined.

- class EUtilsTools[source]

Utilities to fetch information about accession numbers

>>> from sequana.databases import EUtilsTools >>> et = EUtilsTools() >>> et.accession_to_info("K01711.1") {'K01711.1': {'accession': '331784', 'comment': 'Measles virus (strain Edmonston), complete genome', 'gi': '331784', 'identifier': 'gi|331784|gb|K01711.1|MEANPCG[331784]', 'taxid': '11234'}}

- class NCBIDownload[source]

- class NCBITaxonReader(names=None, nodes=None)[source]

This class will help in reading, handling, simplifying NCBI taxonomic DB

When downloading NCBI taxonomy DB using e.g. Kraken, we end up with very large files. One is called names.dmp and the other nodes.dmp. They may be instrospected or simplified using this class

The names.dmp is just a CSV file. The header looks like:

1 | all | | synonym | 1 | root | | scientific name | 2 | Bacteria | Bacteria <prokaryote> | scientific name | 2 | Monera | Monera <Bacteria> | in-part | 2 | Procaryotae | Procaryotae <Bacteria> | in-part |

It is a tabulated file. If we ignore the | signs, it contains 4 columns:

taxid name unique name type of name

The unique name column is generally empty and is dropped internally. There are different types of name, so there can be several rows for a given taxid. For instance for the taxon 1, there isa scientific name and a synonym

The

df_nameis a dataframe that stores the taxid, name and type of name in a dataframe.The second file 'nodes.dmp') looks like:

1 | 1 | no rank | | 8 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | | 2 | 131567 | superkingdom | | 0 | 0 | 11 | 0 | 0 | 0 | 0 | 0 | | 6 | 335928 | genus | | 0 | 1 | 11 | 1 | 0 | 1 | 0 | 0 | | 7 | 6 | species | AC | 0 | 1 | 11 | 1 | 0 | 1 | 1 | 0 | | 9 | 32199 | species | BA | 0 | 1 | 11 | 1 | 0 | 1 | 1 | 0 | |

Again this is a tabulated file. The first three columns are taxid, parent taxid, and rank. Rank is species, genus, family, phylum, etc. Newest version of nodes.dmp hqs only 4 columns (taxid, parent taxid, rank ,a dash)

from sequana.databases import NCBITaxonReader # The first time you may want to download the taxdump files n = NCBITaxonReader() n.download_taxdump() n.init("names.dmp", "nodes.dmp") # next time, you can read it directly n.NCBITaxonReader("names.dmp", "nodes.dmp")

Constructor

- filter_names_dmp_file(output='names_filtered.dmp', taxons=[])[source]

Save a subset of nodes.dmp given list of valid taxons

- Parameters:

str -- Defaults to "nodes_filtered.dmp".

taxons (list) --

- filter_nodes_dmp_file(output='nodes_filtered.dmp', taxons=[])[source]

Save a subset of nodes.dmp given list of valid taxons

- Parameters:

str -- Defaults to "nodes_filtered.dmp".

taxons (list) --

- ftp_url = 'ftp.ncbi.nih.gov'

11.7. Enrichment

11.8. Experimental design

IEM class

- class IEM(filename)[source]

Reader and validator of IEM samplesheets

The IEM samplesheet reader and validator verifies the correctness of the sections in the samplesheet, which are case-sensitive and are enclosed within square brackets. Following the closing bracket, no additional characters are permitted, except for commas and the end-of-line marker.

The samplesheet must start with the [Header] section and end with the [Data] section, with other sections arranged arbitrarily.

The [Header] section must appear on the first line and consist of key-value pairs represented as records, with each line consisting of precisely two fields.

An optional [Settings] section can contain key-value pairs, and the [Reads] section specifies the number of cycles per read, which is exclusively required for MiSeq.

For the IEM samplesheet with adapters, the following fields must be present: [Version], [Name], [Settings], [I7], [I5], [IndexPlateLayout].

The [Data] section, which is a table similar to CSV format, is required and must be located at the end of the samplesheet file. There are no specific ordering requirements for column names, and they are not case-sensitive. At the very least, each sample must have a unique string identifier in the Sample_ID column.

Example of typical Data section to be used with bcl2fastq:

[Header] [Data] Sample_ID,Sample_Name,I7_Index_ID,index,I5_INdex_ID,index2 A10001,Sample_A,D701,AATACTCG,D501,TATAGCCT A10002,Sample_B,D702,TCCGGAGA,D501,TATAGCCT A10003,Sample_C,D703,CGCTCATT,D501,TATAGCCT A10004,Sample_D,D704,GAGATTCC,D501,TATAGCCT

Sequana Standalone

The standalone application sequana contains a subcommand based on this class:

sequana samplesheet

that can be used to check the correctness of a samplesheet:

sequana samplesheet --check SampleSheet.csv

- References:

illumina specifications 970-2017-004.pdf

- property df

Returns the [data] section

- expected_data_headers = {'PE': [], 'SE': []}

- expected_headers_fields = ['IEMFileVersion', 'Investigator Name', 'Instrument Type', 'Experiment Name', 'Date', 'Workflow', 'Application', 'Assay', 'Description', 'Chemistry', 'Index Adapters']

- expected_settings_fields = ['Adapter', 'ReverseComplement', 'CustomRead1PrimerMix']

- property header

- property index_adapters

returns index adapters

- property instrument

returns instrument name

- property name

- quick_fix(output_filename)[source]

Fix sample sheet

Tyical error is when users save the samplesheet as CSV file in excel. This may add trailing ; characters at the end of section, which raises error in bcl2fastq.

- property samples

returns the sample identifiers as a list

- property settings

- to_fasta(adapter_name='')[source]

Extract adapters from [Adapter] section and print them as a fasta file

- validate()[source]

This method checks whether the sample sheet is correctly formatted

- Checks for:

presence of ; at the end of lines indicated an edition with excel that wrongly transformed the data into a pure CSV file

inconsistent numbers of columns in the [DATA] section, which must be CSV-like section

Extra lines at the end are ignored

special characters are forbidden except - and _

checks for Sample_ID column uniqueness

checks for index uniqueness (if single index)

checks for combo of dual indices uniqueness

checks that sample names are unique

and raise a SystemExit error on the first found error.

- property version

return the version of the IEM file

11.9. FASTQ module

Utilities to manipulate FASTQ and Reads

- class FastQ(filename, verbose=False)[source]

Class to handle FastQ files

Some of the methods are based on pysam but a few are also original to sequana. In general, input can be zipped ot not and output can be zipped or not (based on the extension).

An example is the

extract_head()method:f = FastQ("input_file.fastq.gz") f.extract_head(100000, output='test.fastq') f.extract_head(100000, output='test.fastq.gz')

equivalent to:

zcat myreads.fastq.gz | head -100000 | gzip > test100k.fastq.gz

An efficient implementation to count the number of lines is also available:

f.count_lines()

or reads (assuming 4 lines per read):

f.count_reads()

Operators available:

equality ==

- extract_head(N, output_filename)[source]

Extract the heads of a FastQ files

- Parameters:

This function is convenient since it takes into account the input file being compressed or not and the output file being compressed ot not. It is in general 2-3 times faster than the equivalent unix commands combined together but is 10 times slower for the case on uncompressed input and uncompressed output.

Warning

this function extract the N first lines and does not check if there are empty lines in your FastQ/FastA files.

- filter(identifiers_list=[], min_bp=None, max_bp=None, progress=True, output_filename='filtered.fastq')[source]

Save reads in a new file if there are not in the identifier_list

- joining(pattern, output_filename)[source]

not implemented

zcat Block*.fastq.gz | gzip > combined.fastq.gz

- property n_lines

return number of lines (should be 4 times number of reads)

- property n_reads

return number of reads

- rewind()[source]

Allows to iter from the beginning without openning the file or creating a new instance.

- select_random_reads(N=None, output_filename='random.fastq', progress=True)[source]

Select random reads and save in a file

- Parameters:

If you have a pair of files, the same reads must be selected in R1 and R2.:

f1 = FastQ(file1) selection = f1.select_random_reads(N=1000) f2 = FastQ(file2) f2.select_random_reads(selection)

Changed in version 0.9.8: use list instead of set to keep integrity of paired-data

- select_reads(read_identifiers, output_filename=None, progress=True)[source]

identifiers must be the name of the read without starting @ sign and without comments.

- to_fasta(output_filename='test.fasta')[source]

Slow but works for now in pure python with input compressed data.

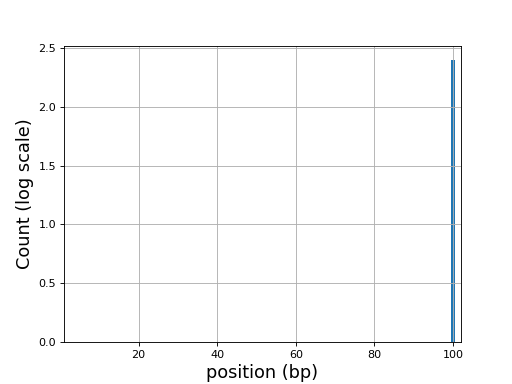

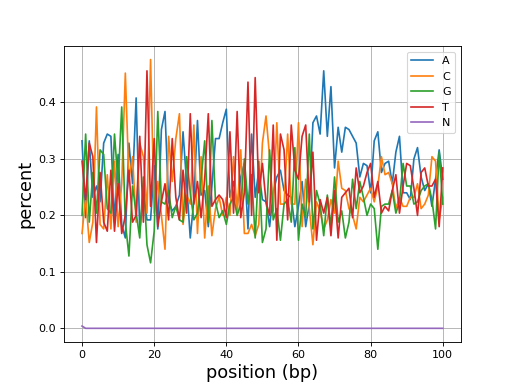

- class FastQC(filename, max_sample=500000, verbose=True, skip_nrows=0)[source]

Simple QC diagnostic

Similarly to some of the plots of FastQC tools, we scan the FastQ and generates some diagnostic plots. The interest is that we'll be able to create more advanced plots later on.

Here is an example of the boxplot quality across all bases:

from sequana import sequana_data from sequana import FastQC filename = sequana_data("test.fastq", "doc") qc = FastQC(filename) qc.boxplot_quality()

(

Source code,png,hires.png,pdf)

Warning

some plots will work for Illumina reads only right now

Note

Although all reads are parsed (e.g. to count the number of nucleotides, some information uses a limited number of reads (e.g. qualities), which is set to 500,000 by deafult.

constructor

- Parameters:

filename --

max_sample (int) -- Large files will not fit in memory. We therefore restrict the numbers of reads to be used for some of the statistics to 500,000. This also reduces the amount of time required to get a good feeling of the data quality. The entire input file is parsed tough. This is required for instance to get the number of nucleotides.

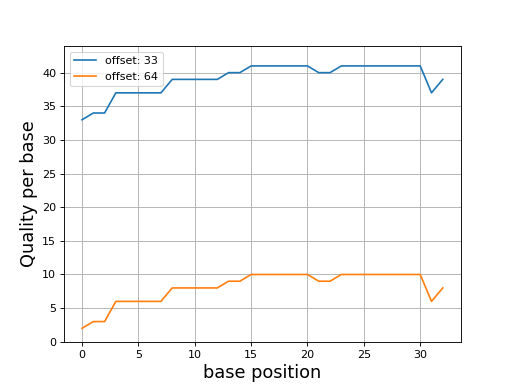

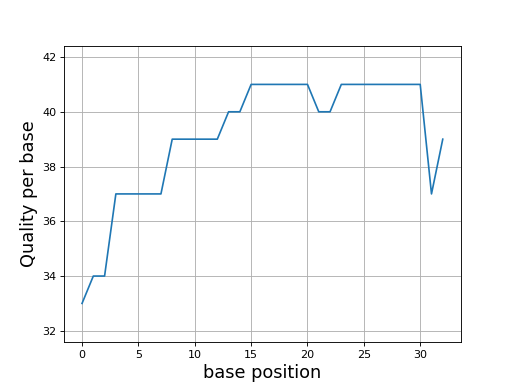

- boxplot_quality(hold=False, ax=None)[source]

Boxplot quality

Same plots as in FastQC that is average quality for all bases. In addition a 1 sigma error enveloppe is shown (yellow).

Background separate zone of good, average and bad quality (arbitrary).

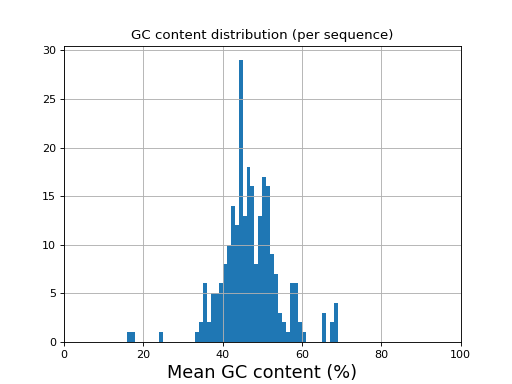

- histogram_gc_content()[source]

Plot histogram of GC content

from sequana import sequana_data from sequana import FastQC filename = sequana_data("test.fastq", "doc") qc = FastQC(filename) qc.histogram_gc_content()

(

Source code,png,hires.png,pdf)

- class Identifier(identifier, version='unknown')[source]

Class to interpret Read's identifier

Warning

Implemented for Illumina 1.8+ and 1.4 . Other cases will simply stored the identifier without interpretation

>>> from sequana import Identifier >>> ident = Identifier('@EAS139:136:FC706VJ:2:2104:15343:197393 1:Y:18:ATCACG') >>> ident.info['x_coordinate'] '2'

Currently, the following identifiers will be recognised automatically:

- Illumina_1_4:

An example is

@HWUSI-EAS100R:6:73:941:1973#0/1

- Illumina_1_8:

An example is:

@EAS139:136:FC706VJ:2:2104:15343:197393 1:Y:18:ATCACG

Other that could be implemented are NCBI

@FSRRS4401BE7HA [length=395] [gc=36.46] [flows=800] [phred_min=0] [phred_max=40] [trimmed_length=95]

Information can also be found here http://support.illumina.com/help/SequencingAnalysisWorkflow/Content/Vault/Informatics/Sequencing_Analysis/CASAVA/swSEQ_mCA_FASTQFiles.htm

11.10. FASTA module

Utilities to manipulate FastA files

- class FastA(filename, verbose=False)[source]

Class to handle FastA files

Works like an iterator:

from sequana import FastA f = FastA("test.fa") read = next(f)

names and sequences can be accessed with attributes:

f.names f.sequences

- property comments

- explode(outdir='.')[source]

extract sequences from one fasta file and save them into individual files

- filter(output_filename, names_to_keep=None, names_to_exclude=None)[source]

save FastA excluding or including specific sequences

- format_contigs_denovo(output_file, len_min=500)[source]

Remove contigs with sequence length below specific threshold.

Example:

from sequana import FastA contigs = FastA("denovo_assembly.fasta") contigs.format_contigs_denovo("output.fasta", len_min=500)

- get_stats()[source]

Return a dictionary with basic statistics

N the number of contigs, the N50 and L50, the min/max/mean contig lengths and total length.

- property lengths

- property names

- save_collapsed_fasta(outfile, ctgname, width=80, comment=None)[source]

Concatenate all contigs and save results

- select_random_reads(N=None, output_filename='random.fasta')[source]

Select random reads and save in a file

- property sequences

- summary(max_contigs=-1)[source]

returns summary and print information on the stdout

This method is used when calling sequana standalone

sequana summary test.fasta

11.11. Feature counts module

feature counts related tools

- class FeatureCount(filename, clean_sample_names=True, extra_name_rm=['_Aligned'], drop_loc=True, guess_design=False)[source]

Read a featureCounts output file.

The input file is expected to be generated with featurecounts tool. It should be a TSV file such as the following one with the header provided herebelow. Of course input BAM files can be named after your samples:

Geneid Chr Start End Strand Length WT1 WT2 WT3 KO1 KO2 KO3 gene1 NC_010602.1 141 1466 + 1326 11 20 15 13 17 17 gene2 NC_010602.1 1713 2831 + 1119 35 54 58 34 53 46 gene3 NC_010602.1 2831 3934 + 1104 9 16 16 4 18 18

from sequana import FeatureCount fc = FeatureCount("all_features.out", extra_name_rm=["_S\d+"] fc.rnadiff_df.to_csv("fc.csv")

Constructor

Get the featureCounts output as a pandas DataFrame

- Parameters:

- property df

- class FeatureCountMerger(pattern='*feature.out', fof=[], skiprows=1)[source]

Merge several feature counts files

- class MultiFeatureCount(rnaseq_folder='.', tolerance=0.1)[source]

Read set of feature counts using different options of strandness

from sequana import sequana_data from sequana.featurecounts import * directory = sequana_data("/rnaseq_0") ff = MultiFeatureCount(directory, 0.15) ff._get_most_probable_strand_consensus() ff.plot_strandness()

(

Source code,png,hires.png,pdf)

See also

get_most_probable_strand()for more information about the tolerance parameter and meaning of strandness.The expected data structure is

rnaseq_folder ├── sample1 │ ├── feature_counts_0 │ │ └── sample_feature.out │ ├── feature_counts_1 │ │ └── sample_feature.out │ ├── feature_counts_2 │ │ └── sample_feature.out └── sample2 ├── feature_counts_0 │ └── sample_feature.out ├── feature_counts_1 │ └── sample_feature.out ├── feature_counts_2 │ └── sample_feature.outThe new expected data structure is

new_rnaseq_output/ ├── sample1 │ └── feature_counts │ ├── 0 │ │ └── sample_feature.out │ ├── 1 │ │ └── sample_feature.out │ └── 2 │ └── sample_feature.out └── sample2 └── feature_counts ├── 0 │ └── sample_feature.out ├── 1 │ └── sample_feature.out └── 2 └── sample_feature.out

- get_most_probable_strand(filenames, tolerance, sample_name)[source]

Return most propable strand given 3 feature count files (strand of 0,1, and 2)

Return the total counts by strand from featureCount matrix folder, strandness and probable strand for a single sample (using a tolerance threshold for strandness). This assumes a single sample by featureCounts file.

- Parameters:

filenames -- a list of 3 feature counts files for a given sample corresponding to the strand 0,1,2

tolerance -- a value below 0.5

sample -- the name of the sample corresponding to the list in filenames

Possible values returned are:

0: unstranded

1: stranded

2: eversely stranded

We compute the number of counts in case 1 and 2 and compute the ratio strand as

. Then we decide

on the possible strandness with the following criteria:

. Then we decide

on the possible strandness with the following criteria:if RS < tolerance, reversely stranded

if RS in 0.5+-tolerance: unstranded.

if RS > 1-tolerance, stranded

otherwise, we cannot decided.

- get_most_probable_strand_consensus(rnaseq_folder, tolerance, sample_pattern='*/feature_counts_[012]', file_pattern='feature_counts_[012]/*_feature.out')[source]

From a sequana RNA-seq run folder get the most probable strand, based on the frequecies of counts assigned with '0', '1' or '2' type strandness (featureCounts nomenclature) across all samples.

- Parameters:

rnaseq_folder -- the main directory

tolerance -- a value in the range 0-0.5. typically 0.1 or 0.15

pattern -- the samples directory pattern

pattern_file -- the feature counts pattern

If guess is not possible given the tolerance, fills with None

Consider this tree structure:

rnaseq_folder ├── sample1 │ └── feature_counts │ ├── 0 │ │ └── sample_feature.out │ ├── 1 │ │ └── sample_feature.out │ └── 2 │ └── sample_feature.out └── sample2 └── feature_counts ├── 0 │ └── sample_feature.out ├── 1 │ └── sample_feature.out └── 2 └── sample_feature.outThen, the following command should all files and report the most probable strand (0,1,2) given the sample1 and sample2:

get_most_probable_strand_consensus("rnaseq_folder", 0.15)

This tree structure is understood automatically. If you have a different one, you can set the pattern (for samples) and pattern_files parameters.

11.12. Sequence and genomic modules

- class DNA(sequence, codons_stop=['TAA', 'TGA', 'TAG'], codons_stop_rev=['TTA', 'TCA', 'CTA'], codons_start=['ATG'], codons_start_rev=['CAT'])[source]

Simple DNA class

>>> from sequana.sequence import DNA >>> d = DNA("ACGTTTT") >>> d.reverse_complement()

Some long computations are done when setting the window size:

d.window = 100

The ORF detection has been validated agains a plasmodium 3D7 ORF file found on plasmodb.org across the 14 chromosomes.

Constructor

A sequence is just a string stored in the

sequenceattribute. It has properties related to the type of alphabet authorised.- Parameters:

sequence (str) -- May be a string of a Fasta File, in which case only the first sequence is used.

complement_in --

complement_out --

letters -- authorise letters. Used in

check()only.

Todo

use counter only once as a property

- property AT_skew

- property GC_skew

- property ORF_pos

- barplot_count_ORF_CDS_by_frame(alpha=0.5, bins=40, xlabel='Frame', ylabel='#', bar_width=0.35)[source]

- property threshold

- property type_filter

- property type_window

- property window

- class RNA(sequence)[source]

Simple RNA class

>>> from sequana.sequence import RNA >>> d = RNA("ACGUUUU") >>> d.reverse_complement()

Constructor

A sequence is just a string stored in the

sequenceattribute. It has properties related to the type of alphabet authorised.- Parameters:

sequence (str) -- May be a string of a Fasta File, in which case only the first sequence is used.

complement_in --

complement_out --

letters -- authorise letters. Used in

check()only.

Todo

use counter only once as a property

- class Repeats(filename_fasta, merge=False, name=None)[source]

Class for finding repeats in DNA or RNA linear sequences.

Computation is performed each time the

thresholdis set to a new value.from sequana import sequana_data, Repeats rr = Repeats(sequana_data("measles.fa")) rr.threshold = 4 rr.hist_length_repeats()

Note

Works with shustring package from Bioconda (April 2017)

Todo

use a specific sequence (first one by default). Others can be selected by name

Constructor

Input must be a fasta file with valid DNA or RNA characters

- Parameters:

filename_fasta (str) -- a Fasta file, only the first sequence is used !

threshold (int) -- Minimal length of repeat to output

name (str) -- if name is provided, scan the Fasta file and select the corresponding sequence. if you want to analyse all sequences, you need to use a loop by setting _header for each sequence with the sequence name found in sequence header.

Note

known problems. Header with a > character (e.g. in the comment) are left strip and only the comments is kept. Another issue is for multi-fasta where one sequence is ignored (last or first ?)

- property begin_end_repeat_position

- property df_shustring

Return dataframe with shortest unique substring length at each position shortest unique substrings are unique in the sequence and its complement Uses shustring tool

- property do_merge

- property header

get first line of fasta (needed in input shustring) and replace spaces by underscores

- hist_length_repeats(bins=20, alpha=0.5, hold=False, fontsize=12, grid=True, title='Repeat length', xlabel='Repeat length', ylabel='#', logy=True)[source]

Plots histogram of the repeat lengths

- property length

- property list_len_repeats

- property longest_shustring

- property names

- property threshold

- class Sequence(sequence, complement_in=b'ACGT', complement_out=b'TGCA', letters='ACGT')[source]

Abstract base classe for other specialised sequences such as DNA.

Sequenced is the base class for other classes such as

DNAandRNA.from sequana import Sequence s = Sequence("ACGT") s.stats() s.get_complement()

Note

You may use a Fasta file as input (see constructor)

Constructor

A sequence is just a string stored in the

sequenceattribute. It has properties related to the type of alphabet authorised.- Parameters:

Todo

use counter only once as a property

- complement()[source]

Alias to

get_complement()

- get_occurences(pattern, overlap=False)[source]

Return position of the input pattern in the sequence

>>> from sequana import Sequence >>> s = Sequence('ACGTTTTACGT') >>> s.get_occurences("ACGT") [0, 7]

- reverse()[source]

Alias to

get_reverse()

- property sequence

Utilities to manipulate and find codons

- class Codon[source]

Utilities to manipulate codons

The codon contains hard-coded set of start and stop codons (bacteria) for strand plus and minus. Adapt to your needs for other organisms. Based on the scan of Methanothermobacter thermautotrophicus bacteria.

from sequana import Codon c = Codon() c.start_codons['+']

- codons = {'start': {'+': frozenset({'ATG', 'GTG', 'TTG'}), '-': frozenset({'CAA', 'CAC', 'CAT'})}, 'stop': {'+': frozenset({'TAA', 'TAG', 'TGA'}), '-': frozenset({'CTA', 'TCA', 'TTA'})}}

- find_start_codon_position(sequence, position, strand, max_shift=10000)[source]

Return starting position and string of closest start codon to a given position

The starting position is on the 5-3 prime direction (see later)

The search starts at the given position, then +1 base, then -1 base, then +2, -2, +3, etc

Here, we start at position 2 (letter G), then shift by +1 and find the ATG string.

>>> from sequana import Codon >>> c = Codon() >>> c.find_start_codon_position("ATGATGATG", 2, "+") (3, 'ATG')

whereas starting at position 1, a shift or +1 (position 2 ) does not hit a start codon. Next, a shift of -1 (position 0) hits the ATG start codon.:

>>> c.find_start_codon_position("ATGATGATG", 1, "+") (3, 'ATG')

On strand -, the start codon goes from right to left. So, in the following example, the CAT start codon (reverse complement of ATG) is found at position 3. Developers must take into account a +3 shift if needed:

>>> c.find_start_codon_position("AAACAT", 3, "-") (3, 'CAT') >>> c.find_start_codon_position("AAACATCAT", 8, "-")

- find_stop_codon_position(sequence, position, strand, max_shift=10000)[source]

Return position and string of closest stop codon to a given position

See

find_start_codon_position()for details.Only difference is that the search is based on stop codons rather than start codons.

>>> from sequana import Codon >>> c = Codon() >>> c.find_stop_codon_position("ATGACCCC", 2, "+") (1, 'TGA')

11.13. Kmer module

11.14. Taxonomy related (Kraken - Krona)

- class KrakenAnalysis(fastq, database, threads=4, confidence=0)[source]

Run kraken on a set of FastQ files

In order to run a Kraken analysis, we firtst need a local database. We provide a Toy example. The ToyDB is downloadable as follows ( you will need to run the following code only once):

from sequana import KrakenDownload kd = KrakenDownload() kd.download_kraken_toydb()

See also

KrakenDownloadfor more databasesThe path to the database is required to run the analysis. It has been stored in the directory ./config/sequana/kraken_toydb under Linux platforms The following code should be platform independent:

import os from sequana import sequana_config_path database = sequana_config_path + os.sep + "kraken_toydb")

Finally, we can run the analysis on the toy data set:

from sequana import sequana_data data = sequana_data("Hm2_GTGAAA_L005_R1_001.fastq.gz", "data") ka = KrakenAnalysis(data, database=database) ka.run()

This creates a file named kraken.out. It can be interpreted with

KrakenResultsConstructor

- Parameters:

fastq -- either a fastq filename or a list of 2 fastq filenames

database -- the path to a valid Kraken database

threads -- number of threads to be used by Kraken

confidence -- parameter used by kraken2

return --

- class KrakenPipeline(fastq, database, threads=4, output_directory='kraken', dbname=None, confidence=0)[source]

Used by the standalone application sequana_taxonomy

This runs Kraken on a set of FastQ files, transform the results in a format compatible for Krona, and creates a Krona HTML report.

from sequana import KrakenPipeline kt = KrakenPipeline(["R1.fastq.gz", "R2.fastq.gz"], database="krakendb") kt.run() kt.show()

Warning

We do not provide Kraken database within sequana. You may either download a database from https://ccb.jhu.edu/software/kraken/ or use this class to download a toy example that will be stored in e.g .config/sequana under Unix platforms. See

KrakenDownload.See also

We provide a standalone application of this class, which is called sequana_taxonomy and can be used within a command shell.

Constructor

- Parameters:

fastq -- either a fastq filename or a list of 2 fastq filenames

database -- the path to a valid Kraken database

threads -- number of threads to be used by Kraken

output_directory -- output filename of the Krona HTML page

dbname --

Description: internally, once Kraken has performed an analysis, reads are associated to a taxon (or not). We then find the correponding lineage and scientific names to be stored within a Krona formatted file. KtImportTex is then used to create the Krona page.

- class KrakenResults(filename='kraken.out', verbose=True)[source]

Translate Kraken results into a Krona-compatible file

If you run a kraken analysis with

KrakenAnalysis, you will end up with a file e.g. named kraken.out (by default).You could use kraken-translate but then you need extra parsing to convert into a Krona-compatible file. Here, we take the output from kraken and directly transform it to a krona-compatible file.

kraken2 uses the --use-names that needs extra parsing.

k = KrakenResults("kraken.out") k.kraken_to_krona()

Then format expected looks like:

C HISEQ:426:C5T65ACXX:5:2301:18719:16377 1 203 1:71 A:31 1:71 C HISEQ:426:C5T65ACXX:5:2301:21238:16397 1 202 1:71 A:31 1:71

Where each row corresponds to one read.

"562:13 561:4 A:31 0:1 562:3" would indicate that: the first 13 k-mers mapped to taxonomy ID #562 the next 4 k-mers mapped to taxonomy ID #561 the next 31 k-mers contained an ambiguous nucleotide the next k-mer was not in the database the last 3 k-mers mapped to taxonomy ID #562

For kraken2, format is slighlty different since it depends on paired or not. If paired,

C read1 2697049 151|151 2697049:117 |:| 0:1 2697049:116

See kraken documentation for details.

Note

a taxon of ID 1 (root) means that the read is classified but in differen domain. https://github.com/DerrickWood/kraken/issues/100

Note

This takes care of fetching taxons and the corresponding lineages from online web services.

constructor

- Parameters:

filename -- the input from KrakenAnalysis class

- boxplot_classified_vs_read_length()[source]

Show distribution of the read length grouped by classified or not

- property df

- get_taxonomy_db(ids)[source]

Retrieve taxons given a list of taxons

- Parameters:

ids (list) -- list of taxons as strings or integers. Could also be a single string or a single integer

- Returns:

a dataframe

Note

the first call first loads all taxons in memory and takes a few seconds but subsequent calls are much faster

- histo_classified_vs_read_length()[source]

Show distribution of the read length grouped by classified or not

- kraken_to_krona(output_filename=None, nofile=False)[source]

- Returns:

status: True is everything went fine otherwise False

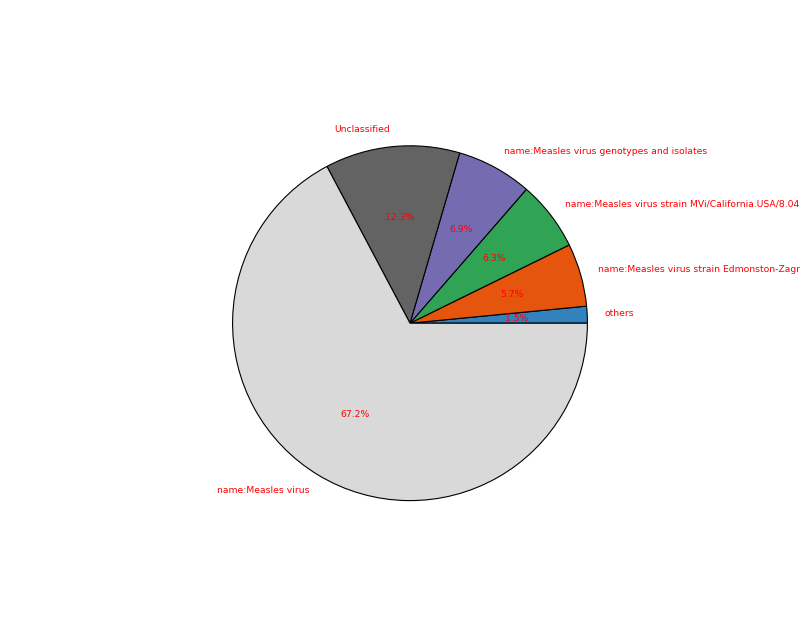

- plot(kind='pie', cmap='tab20c', threshold=1, radius=0.9, textcolor='red', delete_krona_file=False, **kargs)[source]

A simple non-interactive plot of taxons

- Returns:

None if no taxon were found and a dataframe otherwise

A Krona Javascript output is also available in

kraken_to_krona()from sequana import KrakenResults, sequana_data test_file = sequana_data("kraken.out", "doc") k = KrakenResults(test_file) df = k.plot(kind='pie')

(

Source code,png,hires.png,pdf)

See also

to generate the data see

KrakenPipelineor the standalone application sequana_taxonomy.Todo

For a future release, we could use this kind of plot https://stackoverflow.com/questions/57720935/how-to-use-correct-cmap-colors-in-nested-pie-chart-in-matplotlib

- plot2(kind='pie', fontsize=12)[source]

This is the simplified static krona-like plot included in HTML reports

- property taxons

- class KrakenSequential(filename_fastq, fof_databases, threads=1, output_directory='./kraken_sequential/', keep_temp_files=False, output_filename_unclassified=None, output_filename_classified=None, force=False, confidence=0)[source]

Kraken Sequential Analysis

This runs Kraken on a FastQ file with multiple k-mer databases in a sequencial way way. Unclassified sequences with the first database are input for the second, and so on.

The input may be a single FastQ file or paired, gzipped or not. FastA are also accepted.

constructor

- Parameters:

filename_fastq -- FastQ file to analyse

fof_databases -- file that contains a list of databases paths (one per line). The order is important. Note that you may also provide a list of datab ase paths.

threads -- number of threads to be used by Kraken

output_directory -- name of the output directory

keep_temp_files -- bool, if True, will keep intermediate files from each Kraken analysis, and save html report at each step

force (bool) -- if the output directory already exists, the instanciation fails so that the existing data is not overrwritten. If you wish to overwrite the existing directory, set this parameter to iTrue.

- run(dbname='multiple', output_prefix='kraken_final')[source]

Run the sequential analysis

- Parameters:

dbname --

output_prefix --

- Returns:

dictionary summarizing the databases names and classified/unclassied

This method does not return anything creates a set of files:

kraken_final.out

krona_final.html

kraken.png (pie plot of the classified/unclassified reads)

Note

the databases are run in the order provided in the constructor.

- class MultiKrakenResults(filenames, sample_names=None)[source]

Select several kraken output and creates summary plots

import glob mkr = MultiKrakenResults(glob.glob("*/*/kraken.csv")) mkr.plot_stacked_hist()

- class MultiKrakenResults2(filenames, sample_names=None)[source]

Select several kraken output and creates summary plots

import glob mkr = MultiKrakenResults2(glob.glob("*/*/summary.json")) mkr.plot_stacked_hist()

- plot_stacked_hist(output_filename=None, dpi=200, kind='barh', fontsize=10, edgecolor='k', lw=2, width=1, ytick_fontsize=10, max_labels=50, alpha=0.8, colors=None, cmap='viridis', sorting_method='sample_name', max_sample_name_length=30)[source]

Summary plot of reads classified.

- Parameters:

sorting_method -- only by sample name for now

cmap -- a valid matplotlib colormap. viridis is the default sequana colormap.

if you prefer to use a colormap, you can use:

from matplotlib import cm cm = matplotlib.colormap colors = [cm.get_cmap(cmap)(x) for x in pylab.linspace(0.2, 1, L)]

- class NCBITaxonomy(names, nodes)[source]

- Parameters:

names -- can be a local file or URL

nodes -- can be a local file or URL

- class Taxonomy(*args, **kwargs)[source]

This class should ease the retrieval and manipulation of Taxons

There are many resources to retrieve information about a Taxon. For instance, from BioServices, one can use UniProt, Ensembl, or EUtils. This is convenient to retrieve a Taxon (see

fetch_by_name()andfetch_by_id()that rely on Ensembl). However, you can also download a flat file from EBI ftp server, which stores a set or records (2.8M (april 2020).Note that the Ensembl database does not seem to be as up to date as the flat files but entries contain more information.

for instance taxon 2 is in the flat file but not available through the

fetch_by_id(), which uses ensembl.So, you may access to a taxon in 2 different ways getting differnt dictionary. However, 3 keys are common (id, parent, scientific_name)

>>> t = taxonomy.Taxonomy() >>> t.fetch_by_id(9606) # Get a dictionary from Ensembl >>> t.records[9606] # or just try with the get >>> t[9606] >>> t.get_lineage(9606)

Possible ranks are various. You may have biotype, clade, etc ub generally speaking ranks are about lineage. For a given rank, e.g. kingdom, you may have sub division such as superkingdom and subkingdom. order has even more subdivisions (infra, parv, sub, super)

Since version 0.8.3 we use NCBI that is updated more often than the ebi ftp according to their README. ftp://ncbi.nlm.nih.gov/pub/taxonomy/ We use Ensembl to retrieve various information regarding taxons.

constructor

- Parameters:

offline -- if you do not have internet, the connction to Ensembl may hang for a while and fail. If so, set offline to True

from -- download taxonomy databases from ncbi

- download_taxonomic_file(overwrite=False)[source]

Loads entire flat file from NCBI

Do not overwrite the file by default.

- fetch_by_id(taxon)[source]

Search for a taxon by identifier

:return; a dictionary.

>>> ret = s.search_by_id('10090') >>> ret['name'] 'Mus Musculus'

- fetch_by_name(name)[source]

Search a taxon by its name.

- Parameters:

name (str) -- name of an organism. SQL cards possible e.g., _ and % characters.

- Returns:

a list of possible matches. Each item being a dictionary.

>>> ret = s.search_by_name('Mus Musculus') >>> ret[0]['id'] 10090

- get_lineage(taxon)[source]

Get lineage of a taxon

- Parameters:

taxon (int) -- a known taxon

- Returns:

list containing the lineage

11.15. Pacbio module

Pacbio QC and stats

- class BAMSimul(filename)[source]

BAM reader for Pacbio simulated reads (PBsim)

A summary of the data is stored in the attribute

df. It contains information such as the length of the reads, the ACGT content, the GC content.Constructor

- Parameters:

filename (str) -- filename of the input pacbio BAM file. The content of the BAM file is not the ouput of a mapper. Instead, it is the output of a Pacbio (Sequel) sequencing (e.g., subreads).

- property df

- filter_length(output_filename, threshold_min=0, threshold_max=inf)[source]

Select and Write reads within a given range

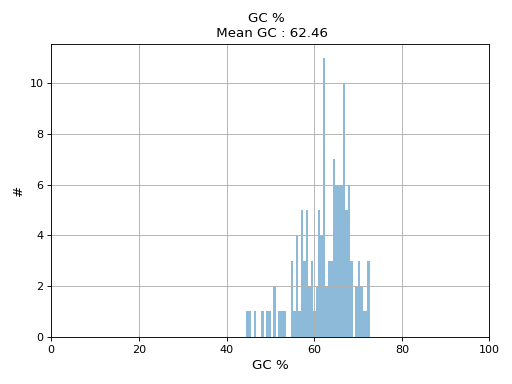

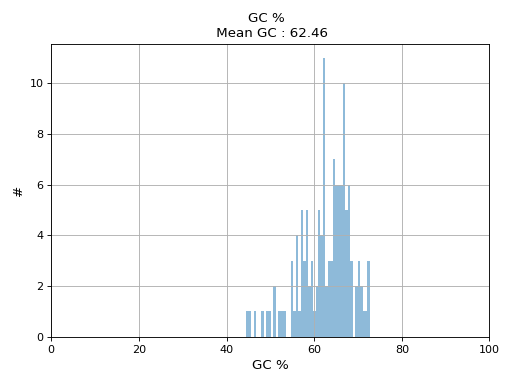

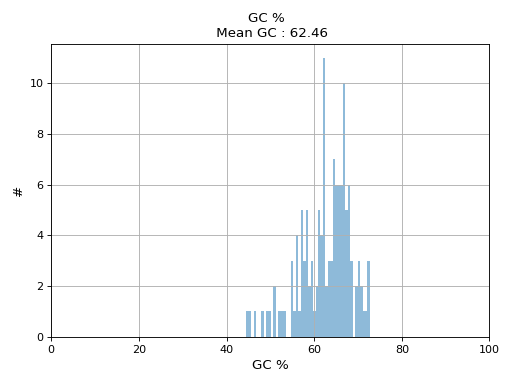

- hist_GC(bins=50, alpha=0.5, hold=False, fontsize=12, grid=True, xlabel='GC %', ylabel='#', label='', title=None)

Plot histogram GC content

- Parameters:

from sequana.pacbio import PacbioSubreads from sequana import sequana_data b = PacbioSubreads(sequana_data("test_pacbio_subreads.bam")) b.hist_GC()

(

Source code,png,hires.png,pdf)

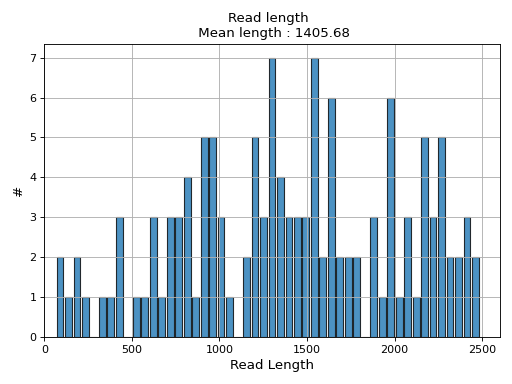

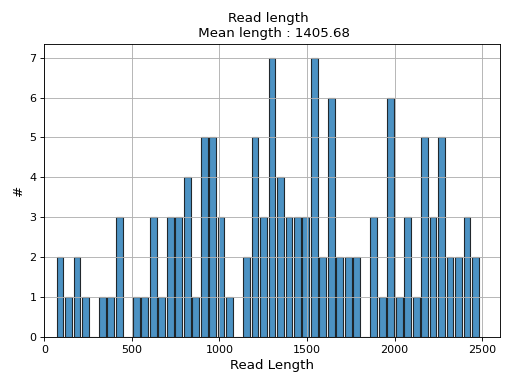

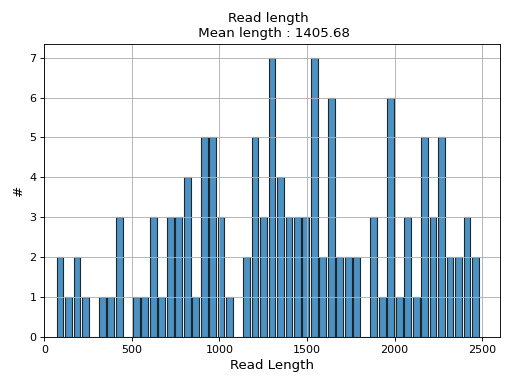

- hist_read_length(bins=50, alpha=0.8, hold=False, fontsize=12, grid=True, xlabel='Read Length', ylabel='#', label='', title=None, logy=False, ec='k', hist_kwargs={})

Plot histogram Read length

- Parameters:

from sequana.pacbio import PacbioSubreads from sequana import sequana_data b = PacbioSubreads(sequana_data("test_pacbio_subreads.bam")) b.hist_read_length()

(

Source code,png,hires.png,pdf)

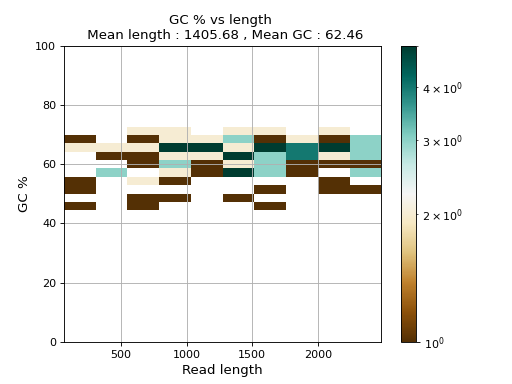

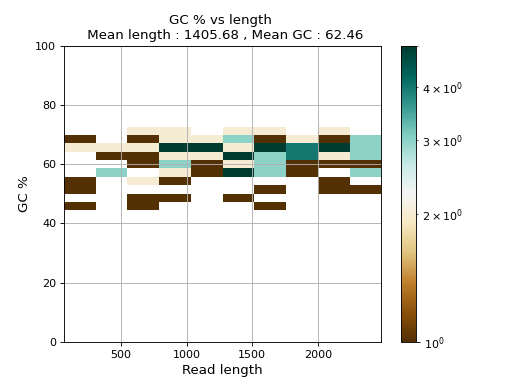

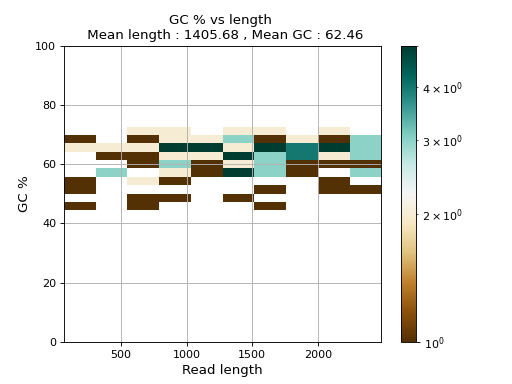

- plot_GC_read_len(hold=False, fontsize=12, bins=[200, 60], grid=True, xlabel='GC %', ylabel='#', cmap='BrBG')

Plot GC content versus read length

- Parameters:

from sequana.pacbio import PacbioSubreads from sequana import sequana_data b = PacbioSubreads(sequana_data("test_pacbio_subreads.bam")) b.plot_GC_read_len(bins=[10, 10])

(

Source code,png,hires.png,pdf)

- reset()

- to_fasta(output_filename, threads=2)

Export BAM reads into a Fasta file

- Parameters:

output_filename -- name of the output file (use .fasta extension)

threads (int) -- number of threads to use

Note

this executes a shell command based on samtools

Warning

this takes a few minutes for 500,000 reads

- to_fastq(output_filename, threads=2)

Export BAM reads into FastQ file

- class Barcoding(filename)[source]

Read as input a file created by smrtlink that stores statistics about each barcode. This is a simple CSV file with one line per barcode<

- hist_polymerase_per_barcode(bins=10, fontsize=12)[source]

histogram of number of polymerase per barcode

Cumulative histogram gives total number of polymerase reads

- class PBSim(input_bam, simul_bam)[source]

Filter an input BAM (simulated with pbsim) so as so keep reads that fit a target distribution.

This uses a MH algorithm behind the scene.

ss = pacbio.PBSim("test10X.bam") clf(); ss.run(bins=100, step=50)

For example, to simulate data set, use:

pbsim --data-type CLR --accuracy-min 0.85 --depth 20 --length-mean 8000 --length-sd 800 reference.fasta --model_qc model_qc_clr

The file model_qc_clr can be retrieved from the github here below.

See https://github.com/pfaucon/PBSIM-PacBio-Simulator for details.

We get a fastq file where simulated read sequences are randomly sampled from the reference sequence ("reference.fasta") and differences (errors) of the sampled reads are introduced.

The Fastq can be converted to

- class PacbioMappedBAM(filename, method)[source]

- Parameters:

filename (str) -- input BAM file

- filter_mapq(output_filename, threshold_min=0, threshold_max=255)[source]

Select and Write reads within a given range

- hist_GC(bins=50, alpha=0.5, hold=False, fontsize=12, grid=True, xlabel='GC %', ylabel='#', label='', title=None)

Plot histogram GC content

- Parameters:

from sequana.pacbio import PacbioSubreads from sequana import sequana_data b = PacbioSubreads(sequana_data("test_pacbio_subreads.bam")) b.hist_GC()

(

Source code,png,hires.png,pdf)

- hist_concordance(bins=100, fontsize=16)[source]

formula : 1 - (in + del + mismatch / (in + del + mismatch + match) )

For BWA and BLASR, the get_cigar_stats are different !!! BWA for instance has no X stored while Pacbio forbids the use of the M (CMATCH) tag. Instead, it uses X (CDIFF) and = (CEQUAL) characters.

Subread Accuracy: The post-mapping accuracy of the basecalls. Formula: [1 - (errors/subread length)], where errors = number of deletions + insertions + substitutions.

- hist_median_ccs(bins=1000, **kwargs)[source]

Group subreads by ZMW and plot median of read length for each polymerase

- hist_read_length(bins=50, alpha=0.8, hold=False, fontsize=12, grid=True, xlabel='Read Length', ylabel='#', label='', title=None, logy=False, ec='k', hist_kwargs={})

Plot histogram Read length

- Parameters:

from sequana.pacbio import PacbioSubreads from sequana import sequana_data b = PacbioSubreads(sequana_data("test_pacbio_subreads.bam")) b.hist_read_length()

(

Source code,png,hires.png,pdf)

- plot_GC_read_len(hold=False, fontsize=12, bins=[200, 60], grid=True, xlabel='GC %', ylabel='#', cmap='BrBG')

Plot GC content versus read length

- Parameters:

from sequana.pacbio import PacbioSubreads from sequana import sequana_data b = PacbioSubreads(sequana_data("test_pacbio_subreads.bam")) b.plot_GC_read_len(bins=[10, 10])

(

Source code,png,hires.png,pdf)

- reset()

- to_fasta(output_filename, threads=2)

Export BAM reads into a Fasta file

- Parameters:

output_filename -- name of the output file (use .fasta extension)

threads (int) -- number of threads to use

Note

this executes a shell command based on samtools

Warning

this takes a few minutes for 500,000 reads

- to_fastq(output_filename, threads=2)

Export BAM reads into FastQ file

- class PacbioSubreads(filename, sample=0)[source]

BAM reader for Pacbio (reads)

You can read a file as follows: